CodingGirls organised a three-hour Web Scraping with R session. The speaker was Pang Long, a dedicated and attentive speaker who explained things clearly throughout the event. The session was important for me because I had been away from R programming for about a year and joined it to refresh my R skills. Note that the class is not aimed at beginners who want to learn R from scratch.

Having attended the event, I found the topic, Web Scraping with R, very interesting.

When we browse a website, we sometimes want to download some of the information on the page. One way is to save the whole page in .html format. However, if you only want, for example, the list of books on a website, then instead of saving the entire page and extracting the entries one by one, you can let R extract them by identifying and exploiting patterns in the raw HTML source code and transforming the result into a usable format. Basic HTML knowledge is therefore important here.
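To make the idea of "patterns in the raw HTML" concrete, here is a minimal sketch assuming a hypothetical book-listing page in which every title sits inside a `<span class="title">`. The HTML is made up for illustration and is parsed from a string so the example runs offline; because every title shares the same class, one selector collects them all.

```r
library(rvest)  # rvest also re-exports the %>% pipe from magrittr

# Hypothetical HTML standing in for a real book-listing page
html <- '
<ul id="books">
  <li class="book"><span class="title">R for Data Science</span></li>
  <li class="book"><span class="title">Advanced R</span></li>
  <li class="book"><span class="title">The Art of R Programming</span></li>
</ul>'

page <- read_html(html)

# Every title follows the same pattern: an element with class "title"
titles <- page %>% html_nodes(".title") %>% html_text()
print(titles)
```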

Throughout the session, the speaker used the tutorial https://rpubs.com/ryanthomas/webscraping-with-rvest, written by Ryan Thomas, as a worked example.

Prerequisites:

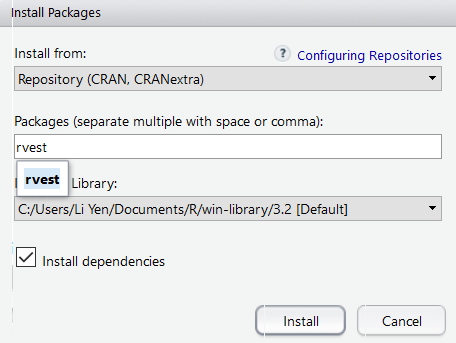

1. Install rvest

2. Install magrittr

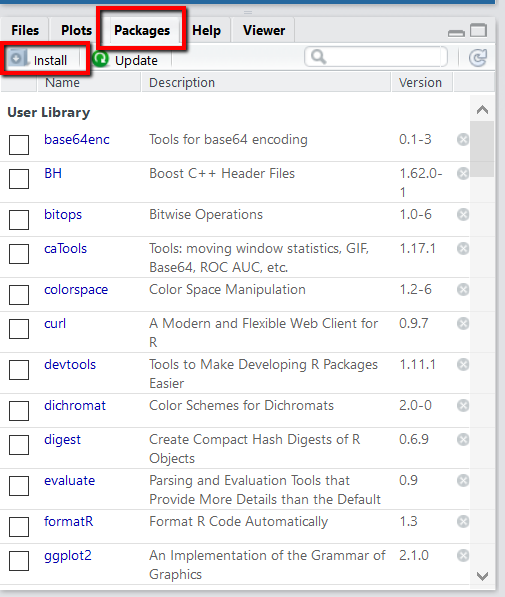

If you do not know how to install a package in RStudio, look for the Packages tab in the right-hand pane, as shown in the screenshot. Click the Install button and type the names of the packages you want to install. If you prefer typing commands, you can use:

```r
install.packages("rvest")
install.packages("magrittr")
```

After installing the packages, we started looking at the website. The tutorial linked above includes a link to a project page; right-click on that page and choose to view the page source to see its raw HTML code. This only works for content that is present in the HTML itself.
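The raw HTML can also be inspected from inside R. This is a minimal sketch, assuming rvest is installed: `read_html()` accepts either a URL or a literal HTML string, so a small made-up page stands in for the real one to keep the snippet runnable offline, and the commented line shows how a URL would be passed instead.

```r
library(rvest)

# For a live site you would pass the URL instead, e.g.
# page <- read_html("https://rpubs.com/ryanthomas/webscraping-with-rvest")
# Hypothetical literal HTML standing in for a downloaded page
page <- read_html('<html><body><h1 id="title">Projects</h1><p class="intro">Hello</p></body></html>')

# Print the raw HTML that was parsed, similar to viewing the page source
cat(as.character(page))

# Show how the tags are nested, which helps when looking for patterns
xml2::html_structure(page)
```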

Before that, we were introduced to some powerful rvest functions that we used throughout the session:

- `html_nodes()`: identifies HTML wrappers (elements)
- `html_nodes(".class")`: selects nodes by CSS class
- `html_nodes("#id")`: selects nodes by id (for example, the id of a `<div>`)
- `html_nodes(xpath = "xpath")`: selects nodes by XPath expression
- `html_attrs()`: extracts the attributes of nodes (useful for debugging)
- `html_table()`: turns HTML tables into data frames
- `html_text()`: strips the HTML tags and extracts only the text
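The functions above can be tried together on one small snippet. This is a sketch on made-up HTML (the ids, classes and table contents are hypothetical) so it runs without a network connection. Note that since rvest 1.0.0, `html_nodes()` is superseded by `html_elements()`, but it still works.

```r
library(rvest)

# Hypothetical HTML containing classes, an id, a link and a table
html <- '
<div id="main">
  <h2 class="project">Bird Count</h2>
  <h2 class="project">Water Watch</h2>
  <a href="https://example.com/join" class="link">Join</a>
  <table>
    <tr><th>Project</th><th>Volunteers</th></tr>
    <tr><td>Bird Count</td><td>120</td></tr>
    <tr><td>Water Watch</td><td>45</td></tr>
  </table>
</div>'
page <- read_html(html)

# html_nodes(".class") selects by class; html_text() keeps only the text
projects <- page %>% html_nodes(".project") %>% html_text()

# html_nodes("#id") selects by id
main <- page %>% html_nodes("#main")

# html_nodes(xpath = ...) makes the same selection as ".project", written as XPath
projects_xp <- page %>% html_nodes(xpath = "//h2[@class='project']") %>% html_text()

# html_attrs() lists the attributes of each selected node
link_attrs <- page %>% html_nodes("a") %>% html_attrs()

# html_table() converts each selected <table> into a data frame
tables <- page %>% html_nodes("table") %>% html_table()
volunteers <- tables[[1]]
print(volunteers)
```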

The key is to tell R exactly where to find the information on the webpage, using the HTML tags, classes and ids as landmarks.
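A selector that is too broad picks up unwanted content. As a sketch on a hypothetical page where the same `<li>` tag appears in both a menu and a book list, combining an id with a tag narrows the match to the intended items.

```r
library(rvest)

# Hypothetical page where <li> is used in two different places
html <- '
<ul id="menu"><li>Home</li><li>About</li></ul>
<ul id="books"><li>Advanced R</li><li>R Packages</li></ul>'
page <- read_html(html)

# Too broad: "li" matches every list item, including the menu entries
all_items <- page %>% html_nodes("li") %>% html_text()

# Specific: only <li> elements inside the element with id "books"
books <- page %>% html_nodes("#books li") %>% html_text()
print(books)
```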

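Other useful snippets:

```r
library(magrittr)
# Strip the white space from the beginning and end of a string;
# inside a %>% pipe, "." stands for the value passed in from the left
"  some text  " %>% gsub("^\\s+|\\s+$", "", .)
# Print progress; page is the number of the results page being scraped
print(paste0("getting data for page: ", page))
```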
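Text pulled out with `html_text()` often carries the line breaks and indentation of the source. This sketch uses a made-up `<p>` element to show the whitespace regular expression cleaning it up inside a pipe, with base R's `trimws()` as an equivalent alternative.

```r
library(rvest)

# Hypothetical HTML whose text carries extra spaces and a line break
page <- read_html('<p class="name">
    Bird Count   </p>')

# html_text() keeps the surrounding white space by default (trim = FALSE)
raw <- page %>% html_nodes(".name") %>% html_text()

# Remove leading and trailing white space; "." is the piped-in value
clean <- raw %>% gsub("^\\s+|\\s+$", "", .)

# Base R's trimws() does the same job
clean2 <- trimws(raw)
print(clean)
```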

```r
# Build the URL of results page number `page` (used inside a loop over pages)
URL <- paste0("https://scistarter.com/finder?phrase=&lat=&lng=&activity=&topic=&search_filters=&search_audience=&page=", page, "#view-projects")
```

The output can be written to a CSV file:

```r
# co.names holds the scraped results; row.names = FALSE drops the index column
write.csv(co.names, "RE100_2016.csv", row.names = FALSE)
```
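Putting the pieces together, here is a minimal sketch of a multi-page scrape that loops over page numbers, builds each URL with `paste0()`, prints progress, collects results and writes them to CSV. Several parts are assumptions for illustration: the helper `parse_projects()` is hypothetical, the `.project` class is not taken from the real SciStarter markup, the page range is arbitrary, and a literal HTML string replaces the live download so the sketch runs offline.

```r
library(rvest)

# Hypothetical helper: pull project names out of one parsed results page.
# The ".project" class is an assumption, not the real site's markup.
parse_projects <- function(doc) {
  doc %>% html_nodes(".project") %>% html_text(trim = TRUE)
}

base <- "https://scistarter.com/finder?phrase=&lat=&lng=&activity=&topic=&search_filters=&search_audience=&page="
pages <- 1:2          # hypothetical number of result pages
results <- list()

for (page in pages) {
  print(paste0("getting data for page: ", page))
  URL <- paste0(base, page, "#view-projects")
  # A live run would download the page and pause between requests:
  # doc <- read_html(URL); Sys.sleep(1)
  # Offline stand-in so the sketch runs without network access:
  doc <- read_html(paste0('<h3 class="project"> Project ', page, ' </h3>'))
  results[[page]] <- data.frame(page = page, name = parse_projects(doc), url = URL)
}

# Stack the per-page data frames into one
all_results <- do.call(rbind, results)

# Write to a temporary file here; use a path such as "projects.csv" in practice
out <- tempfile(fileext = ".csv")
write.csv(all_results, out, row.names = FALSE)

# Read the file back to confirm the round trip
check <- read.csv(out)
print(check)
```

Because `paste0()` is vectorised, `paste0(base, pages, "#view-projects")` would also build all the URLs in one call; the loop form is kept here because each page is downloaded and parsed in turn.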